DynamoDB has the ability to export entire tables or data from specific periods to S3.

The exported data can be imported as a separate table or queried with Athena.

When you export for long-term storage or data analysis, you will often want the data to land in an account separate from the production account where DynamoDB is running, such as a development environment.

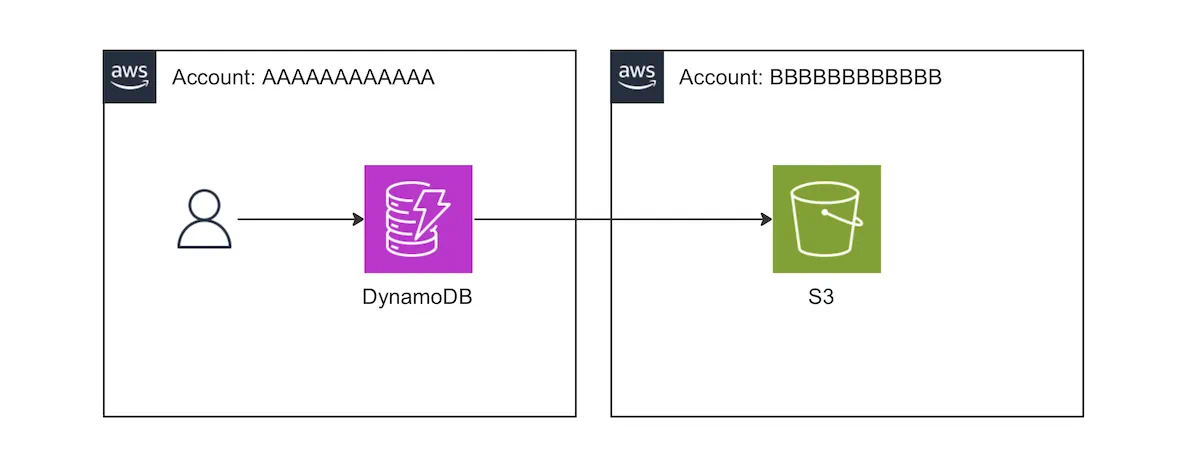

This article explains how to export a DynamoDB table to an S3 bucket in a different account.

Method

In short, the procedure is the one described in the official AWS documentation.

However, this time we will focus on exporting from DynamoDB in account AAAAAAAA to S3 in account BBBBBBBB.

Basically, you will:

- Enable point-in-time recovery for DynamoDB

- Grant permissions to the user in the source account (AAAAAAAA)

- In the destination account (BBBBBBBB), grant a bucket policy that allows access to the bucket from AAAAAAAA

- When exporting from the CLI or code, specify the bucket owner in the arguments (← This is where I got stuck)

  - For AWS CLI, use --s3-bucket-owner

  - For boto3 (Python), use S3BucketOwner

Enabling Point-in-Time Recovery (PITR)

DynamoDB has a mechanism called point-in-time recovery (PITR).

We won’t go into detail here, but having this enabled is a prerequisite for exporting to S3.

If using Terraform

resource "aws_dynamodb_table" "example" {
  # ~~ Omitted ~~

  # PITR is configured as a nested block, not as a string attribute
  point_in_time_recovery {
    enabled = true
  }
}

Reference: Resource: aws_dynamodb_table - Terraform Registry

If using CloudFormation

---
Type: "AWS::DynamoDB::Table"
Properties:
  # ~~ Omitted ~~
  PointInTimeRecoverySpecification:
    PointInTimeRecoveryEnabled: true

Reference: AWS::DynamoDB::Table - AWS CloudFormation
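If using boto3

If the table is not managed by Terraform or CloudFormation, PITR can also be switched on through the API. The following is a minimal sketch, assuming a boto3 DynamoDB client is passed in; the function names enable_pitr and is_pitr_enabled are hypothetical helpers for this article, not part of boto3.

```python
def enable_pitr(client, table_name):
    """Turn on point-in-time recovery and return the reported PITR status."""
    response = client.update_continuous_backups(
        TableName=table_name,
        PointInTimeRecoverySpecification={"PointInTimeRecoveryEnabled": True},
    )
    description = response["ContinuousBackupsDescription"]
    return description["PointInTimeRecoveryDescription"]["PointInTimeRecoveryStatus"]


def is_pitr_enabled(client, table_name):
    """Return True if the table currently reports PITR as ENABLED."""
    response = client.describe_continuous_backups(TableName=table_name)
    description = response["ContinuousBackupsDescription"]
    pitr = description.get("PointInTimeRecoveryDescription", {})
    return pitr.get("PointInTimeRecoveryStatus") == "ENABLED"
```

To use it, create the client with credentials for the source account, for example boto3.client('dynamodb', region_name='ap-northeast-1'), and call enable_pitr(client, 'TableName') before starting the export.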

IAM Policy to Set at the Source

Attach an IAM policy to the entity that will execute the export. If a person is exporting from the console, attach it to that user; if exporting from Lambda, attach it to the IAM role of that Lambda.

It’s a copy-paste from the official documentation, but the following policy will suffice (change the ARN of the DynamoDB table and S3 bucket as appropriate).

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "AllowDynamoDBExportAction",
      "Effect": "Allow",
      "Action": "dynamodb:ExportTableToPointInTime",
      "Resource": "arn:aws:dynamodb:us-east-1:111122223333:table/my-table"
    },
    {
      "Sid": "AllowWriteToDestinationBucket",
      "Effect": "Allow",
      "Action": [
        "s3:AbortMultipartUpload",
        "s3:PutObject",
        "s3:PutObjectAcl"
      ],
      "Resource": "arn:aws:s3:::your-bucket/*"
    }
  ]
}
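Since only the two ARNs change from table to table, the policy can be generated rather than edited by hand. Here is a rough sketch in Python; build_export_iam_policy is a hypothetical helper name, and the table ARN and bucket name in the example call are the placeholders used elsewhere in this article.

```python
import json


def build_export_iam_policy(table_arn, bucket_name):
    """Return the source-side IAM policy document as a dict."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowDynamoDBExportAction",
                "Effect": "Allow",
                "Action": "dynamodb:ExportTableToPointInTime",
                "Resource": table_arn,
            },
            {
                "Sid": "AllowWriteToDestinationBucket",
                "Effect": "Allow",
                "Action": [
                    "s3:AbortMultipartUpload",
                    "s3:PutObject",
                    "s3:PutObjectAcl",
                ],
                # Object-level actions apply to keys inside the bucket, hence "/*"
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            },
        ],
    }


# Example with the placeholder values from this article
policy = build_export_iam_policy(
    "arn:aws:dynamodb:ap-northeast-1:AAAAAAAAAAAA:table/TableName",
    "dynamodb-export-bucket",
)
print(json.dumps(policy, indent=2))
```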

Bucket Policy to Set at the Destination

When exporting across accounts, you need to allow access to the S3 bucket from the source. Set the following policy on the S3 bucket (copy-paste from the official documentation).

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "ExampleStatement",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::123456789012:user/Dave"
      },
      "Action": [
        "s3:AbortMultipartUpload",
        "s3:PutObject",
        "s3:PutObjectAcl"
      ],
      "Resource": "arn:aws:s3:::awsexamplebucket1/*"
    }
  ]
}

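The example above grants permission to an IAM user named Dave. If you want to grant permission to a role instead, such as the execution role of a Lambda function, it won't work as written.

You might think you can do it by specifying the role's ARN in Principal, but that's not the case, so be careful. Instead, specify the root of the source account (AAAAAAAAAAAA) as the principal, as in the following policy. This delegates access to the source account as a whole, and the IAM policy from the previous section then determines which users and roles in that account can actually write to the bucket.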

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "ExampleStatement",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::AAAAAAAAAAAA:root"
      },
      "Action": [
        "s3:AbortMultipartUpload",
        "s3:PutObject",
        "s3:PutObjectAcl"
      ],
      "Resource": "arn:aws:s3:::dynamodb-export-bucket/*"
    }
  ]
}
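The same bucket policy can be produced from the source account ID and the bucket name. The sketch below is only an illustration: build_cross_account_bucket_policy and the Sid value are hypothetical names, and the account ID in the example call is a dummy 12-digit value standing in for AAAAAAAAAAAA.

```python
import json


def build_cross_account_bucket_policy(source_account_id, bucket_name):
    """Return a bucket policy that lets the source account write export objects."""
    is_valid_id = (
        source_account_id.isascii()
        and source_account_id.isdigit()
        and len(source_account_id) == 12
    )
    if not is_valid_id:
        raise ValueError("AWS account IDs are 12-digit numbers")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowExportFromSourceAccount",
                "Effect": "Allow",
                # Account root principal: access is delegated to the whole account
                "Principal": {"AWS": f"arn:aws:iam::{source_account_id}:root"},
                "Action": [
                    "s3:AbortMultipartUpload",
                    "s3:PutObject",
                    "s3:PutObjectAcl",
                ],
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


# Example with a dummy source account ID
bucket_policy = build_cross_account_bucket_policy("111122223333", "dynamodb-export-bucket")
print(json.dumps(bucket_policy, indent=2))
```

The JSON string produced by json.dumps can then be passed as the Policy argument of the S3 client's put_bucket_policy, called with credentials for the destination account (BBBBBBBB).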

Specifying the Bucket Owner

This step is easy to miss if you treat the export as an ordinary cross-account access problem: when exporting from DynamoDB to an S3 bucket in another account, you must explicitly specify the bucket owner.

For AWS CLI:

aws dynamodb export-table-to-point-in-time \
  --table-arn arn:aws:dynamodb:ap-northeast-1:AAAAAAAAAAAA:table/TableName \
  --s3-bucket dynamodb-export-bucket \
  --s3-bucket-owner BBBBBBBBBBBB

This was confirmed with aws-cli 2.15.22, the latest version as of February 2024. For up-to-date details, please check the current documentation.

Reference: export-table-to-point-in-time - AWS CLI Command Reference

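For boto3:

import boto3

# Create the client in the table's region, with credentials for the source account
dynamodb_client = boto3.client('dynamodb', region_name='ap-northeast-1')

dynamodb_client.export_table_to_point_in_time(
    TableArn='arn:aws:dynamodb:ap-northeast-1:AAAAAAAAAAAA:table/TableName',
    S3Bucket='dynamodb-export-bucket',
    # Account ID that owns the destination bucket
    S3BucketOwner='BBBBBBBBBBBB'
)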

This was confirmed with Boto3 1.34.47, the latest version as of February 2024. For up-to-date details, please check the current documentation.

Reference: export_table_to_point_in_time - Boto3 documentation
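The export runs asynchronously, so the call returns before any data has been written to the bucket. As a rough sketch, assuming a boto3 DynamoDB client and the ExportArn found under ExportDescription in the response of export_table_to_point_in_time, the status can be polled with describe_export. The helper name wait_for_export and its default intervals are hypothetical choices for illustration.

```python
import time


def wait_for_export(client, export_arn, poll_seconds=30, max_attempts=120, sleep=time.sleep):
    """Poll describe_export until the export completes, fails, or attempts run out."""
    for _ in range(max_attempts):
        description = client.describe_export(ExportArn=export_arn)["ExportDescription"]
        status = description["ExportStatus"]
        if status == "COMPLETED":
            return description
        if status == "FAILED":
            # FailureCode and FailureMessage are reported when an export fails
            raise RuntimeError(
                f"Export failed: {description.get('FailureCode')}: "
                f"{description.get('FailureMessage')}"
            )
        # Still IN_PROGRESS: wait before asking again
        sleep(poll_seconds)
    raise TimeoutError(f"Export {export_arn} did not finish after {max_attempts} checks")
```

A failed status here is also a quick way to notice a missing bucket policy or bucket owner setting, since the failure code and message are included in the raised error.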

Summary

This article summarized how to export data from DynamoDB to an S3 bucket in another account. In particular, the last point, “Specifying the Bucket Owner,” is easy to overlook, so please be careful.